Prerequisites

- Install and configure Hadoop

1. Download Apache Spark

- https://spark.apache.org/downloads.html

- Under the Download Apache Spark heading, there are two drop-down menus. Use the current non-preview version.

- In our case, in the Choose a Spark release drop-down menu, select 2.4.5.

- In the second drop-down, Choose a package type, leave the selection Pre-built for Apache Hadoop 2.7.

- Click the spark-2.4.5-bin-hadoop2.7.tgz link.
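If you prefer to script the download, the sketch below builds the archive URL for a given release and fetches it. It assumes that past releases such as 2.4.5 stay available on the Apache archive server (archive.apache.org) under dist/spark/spark-VERSION/; spark_archive_url and download_spark are hypothetical helpers, not part of Spark.

```python
import urllib.request
from pathlib import Path

# Assumption: past releases are kept on the Apache archive server
# under dist/spark/spark-<version>/<file name>.
ARCHIVE_BASE = "https://archive.apache.org/dist/spark"


def spark_archive_url(version: str = "2.4.5", hadoop: str = "2.7") -> str:
    """Build the URL of a pre-built Spark package (hypothetical helper)."""
    file_name = f"spark-{version}-bin-hadoop{hadoop}.tgz"
    return f"{ARCHIVE_BASE}/spark-{version}/{file_name}"


def download_spark(dest_dir: str, version: str = "2.4.5", hadoop: str = "2.7") -> Path:
    """Download the .tgz into dest_dir and return the local file path."""
    url = spark_archive_url(version, hadoop)
    target = Path(dest_dir) / url.rsplit("/", 1)[-1]
    urllib.request.urlretrieve(url, str(target))  # needs network access
    return target


if __name__ == "__main__":
    # Only prints the URL; call download_spark(...) yourself to fetch the file.
    print(spark_archive_url())
```

For example, `download_spark(str(Path.home() / "Downloads"))` would save the 2.4.5 package into your Downloads folder.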

2. Create the folder ‘C:\Spark’ and extract the downloaded Spark archive from the ‘Downloads’ folder into ‘C:\Spark’, so that Spark’s bin folder ends up at ‘C:\Spark\bin’.
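The extraction can also be scripted with Python's standard tarfile module. This is a minimal sketch: extract_spark is a hypothetical helper, and it assumes the archive holds a single top-level folder (such as spark-2.4.5-bin-hadoop2.7) whose contents should be moved directly into C:\Spark, and that C:\Spark is empty beforehand.

```python
import shutil
import tarfile
import tempfile
from pathlib import Path


def extract_spark(archive: str, target: str = r"C:\Spark") -> Path:
    """Extract a Spark .tgz so that its bin folder lands directly in target.

    Hypothetical helper. Assumes the archive has one top-level folder
    (e.g. spark-2.4.5-bin-hadoop2.7) and that target is empty beforehand.
    """
    target_path = Path(target)
    target_path.mkdir(parents=True, exist_ok=True)
    with tempfile.TemporaryDirectory() as tmp:
        with tarfile.open(archive, "r:gz") as tar:
            if hasattr(tarfile, "data_filter"):
                tar.extractall(tmp, filter="data")  # reject unsafe paths on newer Pythons
            else:
                tar.extractall(tmp)
        entries = list(Path(tmp).iterdir())
        # Skip the single top-level folder so its contents go straight into target.
        root = entries[0] if len(entries) == 1 and entries[0].is_dir() else Path(tmp)
        for item in root.iterdir():
            shutil.move(str(item), str(target_path / item.name))
    return target_path
```

For example, `extract_spark(str(Path.home() / "Downloads" / "spark-2.4.5-bin-hadoop2.7.tgz"))` unpacks the file downloaded in step 1 into C:\Spark.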

3. Set the environment variables

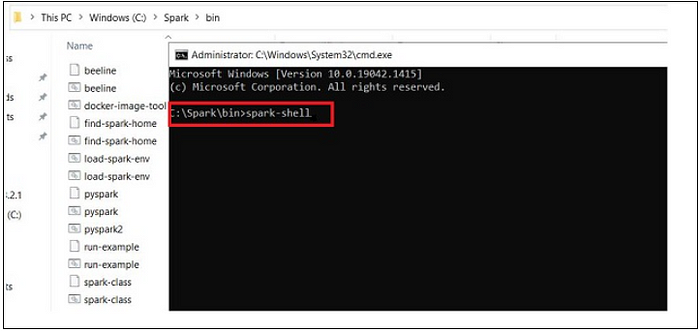
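The exact values are only visible in the screenshot, so the check below assumes the usual setup: SPARK_HOME set to C:\Spark and C:\Spark\bin added to PATH. check_spark_env is a hypothetical helper; run it from a newly opened command prompt, since windows opened before the change do not see the new values.

```python
import ntpath
import os


def check_spark_env(environ=None, sep=";"):
    """Return a list of problems with the Spark-related environment variables.

    Assumption: SPARK_HOME points at the install folder (e.g. C:\\Spark) and
    PATH contains SPARK_HOME\\bin. Windows path rules (ntpath) and the Windows
    PATH separator (;) are used explicitly.
    """
    env = os.environ if environ is None else environ
    problems = []
    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        problems.append("SPARK_HOME is not set")
        return problems
    bin_dir = ntpath.join(spark_home, "bin")
    # Compare case-insensitively and ignore trailing backslashes, since either form works on Windows.
    wanted = ntpath.normcase(bin_dir).rstrip("\\")
    entries = [ntpath.normcase(p).rstrip("\\") for p in env.get("PATH", "").split(sep) if p]
    if wanted not in entries:
        problems.append(f"PATH does not contain {bin_dir}")
    return problems


if __name__ == "__main__":
    for problem in check_spark_env():
        print(problem)
```

An empty result means both variables look consistent; it does not check that the folder itself exists.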

4. Launch Spark

- Open a new command-prompt window using right-click > Run as administrator.

- From C:\Spark\bin, run the command spark-shell.

4.1 The Spark logo appears, and the prompt switches to the Scala shell (scala>).

4.2 Open a web browser and navigate to http://localhost:4040/ to view the Spark web UI.

4.3 To exit Spark and close the Scala shell, press Ctrl+D in the command-prompt window.

4.4 Start Spark as a PySpark shell

From C:\Spark\bin, run pyspark. The PySpark shell links the Python API to Spark Core and initializes the Spark context, which is available in the shell as sc.
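To confirm from a script that a shell's web UI is listening, a plain HTTP request is enough. A minimal sketch using only the standard library; ui_is_up is a hypothetical helper, and the ports 4040 (application), 8080 (master) and 8081 (slave) are the ones this guide uses.

```python
import urllib.request


def ui_is_up(url: str = "http://localhost:4040/", timeout: float = 3.0) -> bool:
    """Return True if a Spark web UI answers at url (2xx or 3xx after redirects)."""
    # Bypass any system proxy: the UI runs on this machine.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except OSError:  # URLError, HTTPError, refused connections and timeouts
        return False


if __name__ == "__main__":
    for name, url in [("application", "http://localhost:4040/"),
                      ("master", "http://localhost:8080/"),
                      ("slave", "http://localhost:8081/")]:
        print(name, "UI up:", ui_is_up(url))
```

Run it while spark-shell or pyspark is open; the 4040 entry should then report True.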

5. Start Master and Slave

Set up and run a Spark master and slave on the same machine (standalone mode).

5.1 Run Master

- Open a command prompt in ‘C:\Spark\bin’.

- Run either of the following commands (spark-class.cmd calls spark-class2.cmd, so both start the master):

C:\Spark\bin>spark-class2.cmd org.apache.spark.deploy.master.Master

C:\Spark\bin>spark-class org.apache.spark.deploy.master.Master

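- Access the master’s web UI at http://localhost:8080/. The page also shows the master URL (of the form spark://host:7077) that slaves and applications connect to.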

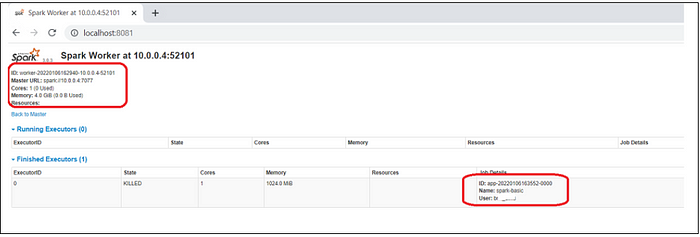

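5.2 Run Slave

- Open another command prompt in ‘C:\Spark\bin’, leaving the master’s window open.

- Run the following command. Replace spark://10.0.0.4:7077 with the master URL shown on the master’s web UI; -c sets the number of cores and -m the amount of memory the slave offers.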

C:\Spark\bin>spark-class2.cmd org.apache.spark.deploy.worker.Worker -c 1 -m 4G spark://10.0.0.4:7077

- Access the slave’s web UI at http://localhost:8081/.

Note: Make sure both the master and slave command prompts stay open and running.

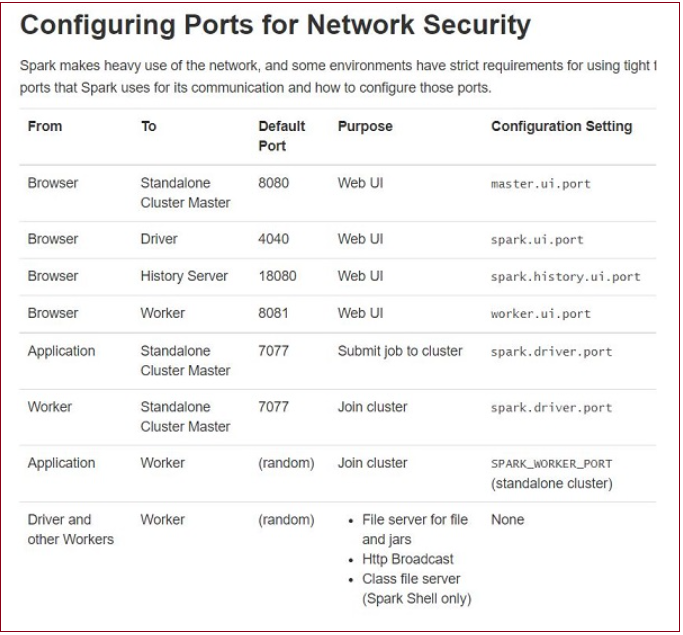
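The master and slave commands can also be assembled, and optionally launched, from Python. This sketch only builds the argument lists used in step 5 and checks that the master URL has the spark://host:port form; master_command, worker_command and launch are hypothetical helpers, the C:\Spark\bin location is assumed from step 2, and launch works on Windows only.

```python
import subprocess
from urllib.parse import urlparse

SPARK_BIN = r"C:\Spark\bin"  # assumption: Spark was extracted to C:\Spark (step 2)


def master_command() -> list:
    """Arguments that start a standalone master."""
    return ["spark-class2.cmd", "org.apache.spark.deploy.master.Master"]


def worker_command(master: str, cores: int = 1, memory: str = "4G") -> list:
    """Arguments that start a standalone worker (slave) attached to master."""
    parsed = urlparse(master)
    if parsed.scheme != "spark" or not parsed.hostname or parsed.port is None:
        raise ValueError(f"expected a URL like spark://host:7077, got {master!r}")
    return [
        "spark-class2.cmd",
        "org.apache.spark.deploy.worker.Worker",
        "-c", str(cores),  # cores the worker offers to applications
        "-m", memory,      # memory the worker offers, e.g. 4G
        master,
    ]


def launch(args: list) -> subprocess.Popen:
    """Start a command in its own process from SPARK_BIN (Windows only)."""
    # cmd /c is used because .cmd batch files are run by the Windows shell.
    return subprocess.Popen(["cmd", "/c", *args], cwd=SPARK_BIN)


if __name__ == "__main__":
    # Print the worker command line instead of launching it.
    print(subprocess.list2cmdline(worker_command("spark://10.0.0.4:7077")))
```

Validating the URL up front catches a common slip, such as pasting the http://localhost:8080/ address instead of the spark:// URL.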

6. Web GUI

Apache Spark provides a suite of web UIs to monitor the status of your Spark/PySpark application, the resource consumption of the Spark cluster, and Spark configurations. The Apache Spark web UI has these tabs:

- Jobs

- Stages (task-level details are shown on each stage’s page)

- Storage

- Environment

- Executors

- SQL

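Open a web browser and navigate to http://localhost:4040/. This application UI is served by the driver, so it is available only while an application (such as spark-shell, pyspark, or the program below) is running.

Note: The master and slave should be started.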
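Besides the browser, Spark exposes the data behind these tabs as JSON through its monitoring REST API, under /api/v1 on the same port. A minimal sketch, assuming the endpoint paths from the Spark monitoring documentation (/applications, then per-application jobs, stages, executors, storage/rdd and environment); tab_snapshot and get_json are hypothetical helpers, and task-level and SQL data are left out for brevity.

```python
import json
import urllib.request

UI_API = "http://localhost:4040/api/v1"  # application UI of a running driver

# Web UI tab -> REST path under /applications/<app-id>/
TAB_ENDPOINTS = {
    "Jobs": "jobs",
    "Stages": "stages",
    "Storage": "storage/rdd",
    "Environment": "environment",
    "Executors": "executors",
}


def get_json(url: str, timeout: float = 5.0):
    """GET url and decode the JSON body, bypassing any system proxy."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def tab_snapshot(base: str = UI_API, tabs=("Jobs", "Executors")) -> dict:
    """Fetch the JSON behind the given tabs for the first listed application."""
    apps = get_json(f"{base}/applications")
    if not apps:
        return {}
    app_id = apps[0]["id"]
    return {tab: get_json(f"{base}/applications/{app_id}/{TAB_ENDPOINTS[tab]}")
            for tab in tabs}


if __name__ == "__main__":
    try:
        snapshot = tab_snapshot()
    except (OSError, ValueError) as exc:  # nothing on port 4040, or not JSON
        print("Spark UI not reachable:", exc)
    else:
        for job in snapshot.get("Jobs", []):
            print(job["jobId"], job["status"])
```

Because the port 4040 UI goes away when the driver stops, run this while spark-shell, pyspark or spark_basic.py is still active.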

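Create a Python program as below and save it as spark_basic.py on the desktop. It uses the findspark and numpy packages (pip install findspark numpy).

- spark_basic.py

```python
import findspark

findspark.init(r'C:\Spark')  # add Spark's Python libraries to sys.path so pyspark can be imported

from pyspark import SparkConf, SparkContext

conf = SparkConf()
conf.setMaster('spark://10.0.0.4:7077')  # master URL shown on the master web UI
conf.setAppName('spark-basic')

sc = SparkContext(conf=conf)


def mod(x):
    # numpy is imported inside the function, so the import runs in the Python workers that execute the map
    import numpy as np
    return (x, np.mod(x, 2))


# Distribute 0..999, pair each value with x mod 2, and return the first 10 pairs to the driver
result = sc.parallelize(range(1000)).map(mod).take(10)
print(result)

sc.stop()  # shut down the SparkContext so the application is marked as finished
```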
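To know which values the program should return, the same computation can be reproduced in plain Python without a cluster. This is only a local stand-in: x % 2 replaces np.mod(x, 2) (the NumPy docs describe np.mod as equivalent to Python's % operator), and itertools.islice plays the role of take(10), which returns the first 10 elements in partition order.

```python
from itertools import islice


def mod(x):
    """Pair x with x mod 2, as the Spark job does with np.mod."""
    return (x, x % 2)


# map is lazy, so islice evaluates only the first 10 elements, roughly like take(10).
first_ten = list(islice(map(mod, range(1000)), 10))
print(first_ten)
# [(0, 0), (1, 1), (2, 0), (3, 1), (4, 0), (5, 1), (6, 0), (7, 1), (8, 0), (9, 1)]
```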

Run the program (for example, python spark_basic.py from the desktop folder), then refresh the master web UI.

Refresh the slave web UI.

Note: Make sure the master and slave are running while the Spark application (the Python file) runs.