Managing permissions and data governance in the Power BI Service (Microsoft's cloud offering of Power BI) across multiple divisions and business areas (such as Finance, Technical BI, and Customer BI) requires a structured, scalable, and secure model that aligns with both the organizational structure and data security policies.

Below is a step-by-step, best-practice approach that starts with the Finance department and then expands to other departments.

🔐 Step 1: Establish Governance Framework

a. Define Roles and Responsibilities

- Data Owners (e.g., Treasury lead): approve access to datasets and reports.

- Data Stewards: maintain data quality, define business terms.

- Power BI Admins: manage workspace structure, monitor usage, enforce policies.

- Report Authors: develop dashboards and reports.

- Consumers/Business Users: view and interact with reports.

🏗️ Step 2: Design Workspace Strategy

Finance Department (Separate Workspaces per Business Area):

Create dedicated Power BI Workspaces for each business area:

- Finance – Treasury

- Finance – GBS

- Finance – Business Finance

- Finance – CIT

- Finance – FBI

Each workspace:

- Is owned by the department's data owners

- Has clear permissions (see below)

- Is used for publishing datasets, reports, and dashboards

Other Departments:

- Technical BI

- Customer BI

- Other shared or cross-departmental workspaces as needed
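You can generate the workspace naming pattern above instead of typing it by hand, which keeps names consistent as more departments are onboarded. The Python sketch below is illustrative only and rests on these assumptions:

- It targets the Power BI REST API "Create Group" endpoint (`POST https://api.powerbi.com/v1.0/myorg/groups` with a `name` in the JSON body).
- It treats Technical BI and Customer BI as single workspaces. This is a hypothetical simplification.
- It only builds request descriptions. Acquiring an access token and sending the requests are left out.

```python
# Illustrative sketch: builds (but does not send) Power BI REST API
# "Create Group" (workspace) requests from a department/business-area plan.
from typing import Dict, List

API_BASE = "https://api.powerbi.com/v1.0/myorg"

# Department -> business areas. Finance areas follow the strategy above;
# the empty lists for the other departments are a hypothetical simplification.
WORKSPACE_PLAN: Dict[str, List[str]] = {
    "Finance": ["Treasury", "GBS", "Business Finance", "CIT", "FBI"],
    "Technical BI": [],
    "Customer BI": [],
}


def workspace_names(plan: Dict[str, List[str]]) -> List[str]:
    """One workspace per business area, or one per department if it has no split."""
    names: List[str] = []
    for department, areas in plan.items():
        if areas:
            names.extend(f"{department} – {area}" for area in areas)
        else:
            names.append(department)
    return names


def create_workspace_requests(names: List[str]) -> List[dict]:
    """Describe one POST /groups call per workspace; the caller adds auth and sends it."""
    return [
        {"method": "POST", "url": f"{API_BASE}/groups", "json": {"name": name}}
        for name in names
    ]
```

For example, `create_workspace_requests(workspace_names(WORKSPACE_PLAN))` yields seven request descriptions, starting with one for "Finance – Treasury". Keeping the plan as data means that adding a business area is a one-line change reviewed like any other configuration.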

👥 Step 3: Set Up Role-Based Access Control (RBAC)

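Use Power BI workspace roles together with Azure Active Directory (AAD, now Microsoft Entra ID) security groups:

- Create AAD groups for each access level (viewers, contributors, admins).

- Map groups to workspace roles:

  - Admin – for workspace owners (full control, including managing access)

  - Member – for key contributors (can also share content, publish apps, and add other users)

  - Contributor – for report developers (create, edit, and publish content)

  - Viewer – for report consumers (read-only)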

Example (Finance – Treasury):

- Finance-Treasury-Admins

- Finance-Treasury-Contributors

- Finance-Treasury-Viewers

Benefits:

- Centralized permission management

- Easy onboarding/offboarding of users
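Because the group names encode the access level, the mapping from group to workspace role can be derived mechanically. The Python sketch below makes some assumptions:

- It uses the Power BI REST API "Add Group User" call (`POST .../groups/{workspaceId}/users` with `identifier`, `principalType`, and `groupUserAccessRight`).
- The workspace ID and group object IDs are placeholders. Real group IDs would come from Entra ID.
- It only builds the request bodies.

```python
# Illustrative sketch: derive workspace roles from the <Area>-<Level> group
# naming convention and build "Add Group User" request bodies (not sent here).
from typing import Dict, List

API_BASE = "https://api.powerbi.com/v1.0/myorg"

# Naming-convention suffix -> Power BI workspace role (groupUserAccessRight value).
ROLE_BY_SUFFIX: Dict[str, str] = {
    "Admins": "Admin",
    "Members": "Member",
    "Contributors": "Contributor",
    "Viewers": "Viewer",
}


def security_group_names(prefix: str, suffixes: List[str]) -> List[str]:
    """Apply the convention, e.g. ("Finance-Treasury", ["Viewers"]) -> Finance-Treasury-Viewers."""
    return [f"{prefix}-{suffix}" for suffix in suffixes]


def role_for_group(group_name: str) -> str:
    """Map a group to its workspace role using the text after the last hyphen."""
    suffix = group_name.rsplit("-", 1)[-1]
    if suffix not in ROLE_BY_SUFFIX:
        raise ValueError(f"{group_name!r} does not follow the naming convention")
    return ROLE_BY_SUFFIX[suffix]


def add_group_requests(workspace_id: str, group_ids: Dict[str, str]) -> List[dict]:
    """One POST /groups/{id}/users call per security group.

    group_ids maps group display name -> Entra ID object ID (placeholders in examples).
    """
    return [
        {
            "method": "POST",
            "url": f"{API_BASE}/groups/{workspace_id}/users",
            "json": {
                "identifier": object_id,
                "principalType": "Group",
                "groupUserAccessRight": role_for_group(name),
            },
        }
        for name, object_id in group_ids.items()
    ]
```

Granting access to groups rather than individuals is what makes onboarding and offboarding cheap. Adding someone to `Finance-Treasury-Viewers` in Entra ID is enough, and no workspace change is needed.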

📊 Step 4: Centralize and Govern Data Sources

Use Shared Datasets (called semantic models in current Power BI):

- Create certified or promoted datasets in centralized workspaces (e.g., Finance – Data Models)

- Enable reusability across business areas and reports

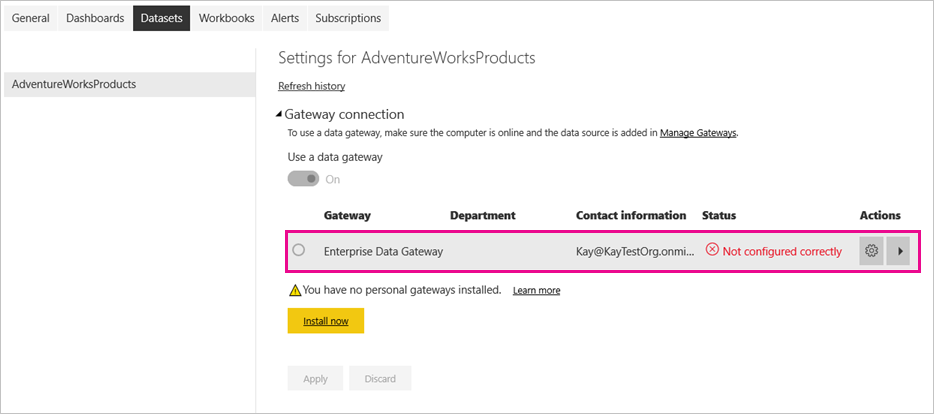

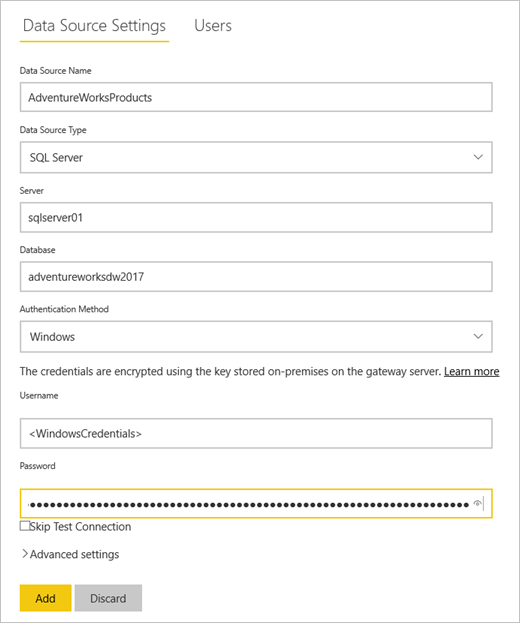

Configure Data Source Credentials:

- Use Gateway Connections for on-premises sources

- Use Managed Identity or Service Principal where possible

Define Access at the Dataset Level:

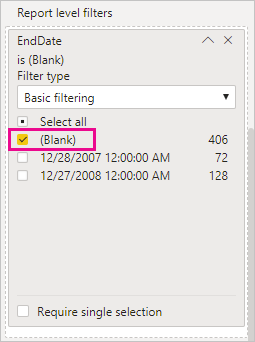

- Use Row-Level Security (RLS) in the dataset to restrict data based on user identity

- Example: RLS for business unit → only GBS users can see GBS financials
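In the dataset itself, each RLS role is a DAX filter, for example `[BusinessUnit] = "GBS"`. Users or security groups are then assigned to roles in the Power BI Service. The Python sketch below only simulates how those rules combine, using hypothetical role names and a made-up row type. Three points are worth noting:

- Roles are additive, so a user in several roles sees the union of their rows.
- A Viewer in no role sees nothing.
- RLS is not applied to workspace Admins, Members, or Contributors, which is one more reason to keep consumers in the Viewer group.

```python
# Simulation only: mimics how Power BI RLS roles filter rows. The real filters
# are DAX expressions defined on the dataset, not Python.
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set


@dataclass(frozen=True)
class FinanceRow:
    business_unit: str
    account: str
    amount: float


# Hypothetical RLS roles: role name -> business units its DAX filter admits,
# e.g. role "GBS" stands for the filter [BusinessUnit] = "GBS".
ROLE_FILTERS: Dict[str, Set[str]] = {
    "GBS": {"GBS"},
    "Treasury": {"Treasury"},
}

# Workspace roles with edit rights are not subject to RLS.
RLS_EXEMPT_WORKSPACE_ROLES = {"Admin", "Member", "Contributor"}


def visible_rows(
    rows: Iterable[FinanceRow], rls_roles: Set[str], workspace_role: str = "Viewer"
) -> List[FinanceRow]:
    rows = list(rows)
    if workspace_role in RLS_EXEMPT_WORKSPACE_ROLES:
        return rows
    # Roles are additive: union of the business units admitted by each role.
    # With no roles the union is empty, so the user sees no rows.
    allowed = set().union(*(ROLE_FILTERS[role] for role in rls_roles))
    return [row for row in rows if row.business_unit in allowed]
```

For example, a Viewer in the `GBS` role sees only GBS rows, while the same person as a Contributor would see every row.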

📱 Step 5: Use Apps for Distribution

Package curated content into Power BI Apps for each audience:

- Finance – Treasury App

- Finance – CIT App

- Customer BI – Insights App

Apps allow:

- Clean, user-friendly interface

- Controlled distribution

- Audience-specific access without granting workspace access

🛡️ Step 6: Implement Data Governance Policies

a. Use Endorsement (Certified / Promoted Datasets)

- Mark trusted datasets as certified or promoted

- Restrict who can certify content (certification rights are granted to specific security groups in the Admin Portal tenant settings)

b. Lineage and Impact Analysis

- Use Power BI Lineage View to track dependencies

- Before updating a dataset, see which reports are impacted
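Lineage View is interactive and scoped to one workspace. For a scripted, cross-workspace impact check, the admin Scanner API can be used instead: you call `POST .../admin/workspaces/getInfo?lineage=True`, then poll `scanStatus` and fetch `scanResult`. The Python sketch below assumes a trimmed-down scan result in which each workspace lists its `reports` with a `datasetId`. It finds every report, in any workspace, bound to a given shared dataset. Treat the JSON shape as an assumption to verify against your own scan output.

```python
# Illustrative sketch: impact analysis over a (simplified) Scanner API result.
from typing import List, Tuple


def impacted_reports(scan_result: dict, dataset_id: str) -> List[Tuple[str, str]]:
    """Return (workspace name, report name) for every report bound to dataset_id.

    Assumes scan_result looks like {"workspaces": [{"name": ..., "reports":
    [{"name": ..., "datasetId": ...}]}]}; missing keys are treated as empty.
    """
    hits: List[Tuple[str, str]] = []
    for workspace in scan_result.get("workspaces", []):
        for report in workspace.get("reports", []):
            if report.get("datasetId") == dataset_id:
                hits.append((workspace.get("name", ""), report.get("name", "")))
    return hits
```

With a certified dataset in "Finance – Data Models", this surfaces reports in Treasury, GBS, and other workspaces that would be affected by a schema change, before the change is published.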

c. Data Sensitivity Labels

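- Apply Microsoft Purview Information Protection (formerly Microsoft Information Protection) sensitivity labels

- Enforce compliance and visibility across Microsoft 365: labels carry over when data is exported to supported formats such as Excel, PowerPoint, and PDF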

🔍 Step 7: Monitor & Audit

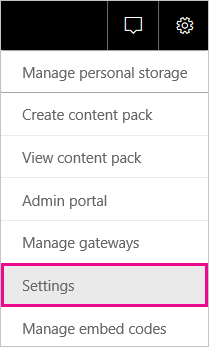

Use Power BI Admin Portal & Audit Logs:

- Audit access, sharing, and usage

- Set up usage metrics reports

- Monitor for unauthorized sharing and stale reports

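For detailed tracking, use the Power BI activity log (available through the admin Activity Events REST API) or the unified audit log in the Microsoft Purview compliance portal (formerly the Microsoft 365 compliance center).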
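Here is a minimal Python sketch of pulling the activity log and flagging stale reports. It assumes the admin endpoint `GET .../admin/activityevents`, which takes quoted `startDateTime`/`endDateTime` values within a single UTC day. It pages by following `continuationUri` until `lastResultSet` is true and reads the `Activity`, `ReportId`, and `CreationTime` event fields. The remaining details are assumptions:

- `get_json` is a hypothetical stand-in for an authenticated HTTP GET.
- The 30-day staleness threshold is an arbitrary example.
- The API only keeps a limited history, so in practice you would archive each day's events and run the staleness check over the archive.

```python
# Illustrative sketch: page through one UTC day of Power BI activity events,
# then flag reports with no recent ViewReport activity.
from datetime import date, datetime, timedelta
from typing import Callable, Dict, Iterable, List, Optional

API_BASE = "https://api.powerbi.com/v1.0/myorg"


def activity_events_url(day: date) -> str:
    """Start and end must fall in the same UTC day, quoted as the API expects."""
    start = f"{day.isoformat()}T00:00:00.000Z"
    end = f"{day.isoformat()}T23:59:59.999Z"
    return f"{API_BASE}/admin/activityevents?startDateTime='{start}'&endDateTime='{end}'"


def fetch_day(day: date, get_json: Callable[[str], dict]) -> List[dict]:
    """Collect all events for a day; get_json is a hypothetical authenticated GET."""
    events: List[dict] = []
    url: Optional[str] = activity_events_url(day)
    while url:
        page = get_json(url)
        events.extend(page.get("activityEventEntities", []))
        # Stop on the last page; otherwise follow the continuation link.
        url = None if page.get("lastResultSet") else page.get("continuationUri")
    return events


def _parse_utc(timestamp: str) -> datetime:
    # Replace the trailing "Z" so older Python versions' fromisoformat accepts it.
    return datetime.fromisoformat(timestamp.replace("Z", "+00:00"))


def stale_reports(
    report_ids: Iterable[str], events: Iterable[dict], now: datetime, max_age_days: int = 30
) -> List[str]:
    """Reports never viewed, or last viewed before now - max_age_days."""
    last_view: Dict[str, datetime] = {}
    for event in events:
        report_id = event.get("ReportId")
        if event.get("Activity") == "ViewReport" and report_id:
            seen = _parse_utc(event["CreationTime"])
            if report_id not in last_view or seen > last_view[report_id]:
                last_view[report_id] = seen
    cutoff = now - timedelta(days=max_age_days)
    return [rid for rid in report_ids if rid not in last_view or last_view[rid] < cutoff]
```

Injecting `get_json` keeps the paging logic testable without network access and lets you plug in whichever HTTP client and token acquisition your tenant uses.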

🔁 Step 8: Iterate, Scale, and Expand

- Extend the above approach to Technical BI, Customer BI, and other departments

- Create Data Domain Councils for cross-department governance

- Run quarterly reviews of permissions, stale workspaces, and compliance
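A quarterly permission review can be partly automated by checking each workspace's access list against the conventions from Step 3. The Python sketch below assumes entries shaped like the Power BI REST API workspace-user objects (`displayName`, `principalType`, `groupUserAccessRight`). It flags three cases:

- Direct user assignments.
- Groups whose names break the naming convention.
- Groups holding a role that does not match their name.

Service principals (`principalType` "App") are deliberately skipped here. Treat the specific rules as example policy, not a complete audit.

```python
# Illustrative sketch: flag workspace access entries that break the
# group-based conventions (same suffix -> role mapping as Step 3).
from typing import Dict, List

ROLE_BY_SUFFIX: Dict[str, str] = {
    "Admins": "Admin",
    "Members": "Member",
    "Contributors": "Contributor",
    "Viewers": "Viewer",
}


def review_workspace_access(workspace_name: str, users: List[dict]) -> List[str]:
    findings: List[str] = []
    for entry in users:
        principal = entry.get("principalType")
        name = entry.get("displayName") or entry.get("identifier", "?")
        role = entry.get("groupUserAccessRight")
        if principal == "User":
            # Access should flow through security groups, not individuals.
            findings.append(f"{workspace_name}: {name} has direct {role} access")
        elif principal == "Group":
            expected = ROLE_BY_SUFFIX.get(name.rsplit("-", 1)[-1])
            if expected is None:
                findings.append(f"{workspace_name}: group {name} does not follow the naming convention")
            elif expected != role:
                findings.append(f"{workspace_name}: group {name} holds {role}, expected {expected}")
        # Other principal types (e.g. "App" for service principals) are not checked here.
    return findings
```

Running this across all workspaces each quarter gives reviewers a short exception list instead of a full access dump.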

🚦 Sample Finance Permission Table:

| Workspace | AAD Admin Group | AAD Contributor Group | AAD Viewer Group |
|---|---|---|---|
| Finance – Treasury | Finance-Treasury-Admins | Finance-Treasury-Contributors | Finance-Treasury-Viewers |
| Finance – GBS | Finance-GBS-Admins | Finance-GBS-Contributors | Finance-GBS-Viewers |
| Finance – Data Models | Finance-Models-Admins | Finance-Models-DataStewards | – Certified Dataset Users – |
