Hive LLAP in practice: sizing, setup and troubleshooting

Context

Whilst Apache Spark has commonly been used for big data processing at G-Research, we have seen increased interest in using Hive LLAP for BI dashboards and other interactive workloads. Accordingly, the Big Data Platform Engineering and Architecture teams have made LLAP available on G-Research’s Hadoop clusters. This blog post is intended to share what we have learned about deploying LLAP on mixed-use, multi-tenant clusters.

Background

Hive LLAP is an enhancement to the existing Hive on Tez execution model. It uses persistent daemons to provide an I/O layer and in-memory caching for low latency queries.

Hiveserver2 Interactive is a Thrift server that provides a JDBC interface for connecting to LLAP.

Query coordinators (Tez Application Masters) accept incoming requests and execute them via the LLAP daemons.

The daemons (which run on cluster worker nodes, and are implemented as a YARN service) handle I/O, caching, and query fragment execution. Since the daemons are long-lived processes, the container startup costs usually seen with traditional Hive on Tez queries are eliminated. In order to reduce garbage collection overhead, the daemons cache data off-heap.

Prerequisites

The information contained in this blog post has been tested with the following component versions:

- Ambari 2.7.5.0

- Hadoop 3.1.1

- Hive 3.1.0

- Ranger 1.2.0

- Tez 0.9.1

Hadoop 3 and Hive 3 differ significantly from earlier releases. For example, in Hive 3, LLAP has been migrated from Slider to the YARN services framework.

Since this blog post is about deploying Hive LLAP in production, we make some assumptions about the YARN configuration in your cluster. Firstly, we assume that preemption has been enabled. Secondly, we assume that a number of YARN configuration parameters have been set, allowing the LLAP daemons to use all the CPU and memory resources available on the machine where they run:

- yarn.nodemanager.resource.memory-mb, i.e. the per-node amount of memory that can be used for YARN containers. This should be equal to the amount of RAM on the machine, minus whatever is needed by the operating system

- yarn.scheduler.maximum-allocation-mb, i.e. the maximum amount of memory that a YARN container can use

- yarn.nodemanager.resource.cpu-vcores, i.e. the per-node number of vCores that can be used for YARN containers. In Ambari-managed clusters, this defaults to 80% of available vCPUs, but can be tuned for specific workloads and hardware

- yarn.scheduler.maximum-allocation-vcores, i.e. the maximum number of vCores that a YARN container can use. This parameter is relevant when CPU isolation has been enabled, which means that YARN uses cgroups to ensure containers only use the CPU resources that have been allocated to them

Finally, we assume that your cluster uses Kerberos for authentication.

YARN Node Labels

Sizing LLAP daemons can be a complicated business. In order to simplify things, we decided to use dedicated nodes for LLAP. This means creating an exclusive YARN node label for the LLAP YARN queue.

This has a number of advantages:

- A simple scaling model, i.e. the most granular unit of LLAP capacity is an entire server

- LLAP daemons can be spawned without containers requiring preemption, i.e. LLAP can always start up in a timely manner

- Having a smaller number of large LLAP daemons (rather than a larger number of small daemons) is optimal from a performance perspective as it reduces inter-daemon network communication

- The stability and availability of LLAP is improved, because other applications cannot preempt LLAP containers

The last point is particularly important. It should be possible to prevent the daemons from being preempted by assigning the highest queue priority to LLAP. In our experience, this did not help. Even though our LLAP queue was configured so that it would never exceed its minimum capacity, and was assigned the highest priority, the daemons were still preempted from time to time. Even disabling preemption on the LLAP queue did not help. Since we always planned to implement node labels (which we knew would prevent preemption) we chose not to spend a lot of time on a root cause investigation.

A final consideration when using node labels with LLAP is YARN-9209. With this bug, the daemons ignore the configured node label. The solution is to either apply the patch, or specify node_partitions in the placement policy of the YARN service. The latter is achieved by editing /usr/hdp/current/hive-server2-hive/scripts/llap/yarn/templates.py on each Hiveserver2 Interactive node. Replace <llap node label> below with the actual LLAP node label name:

    "placement_policy": {
      "constraints": [
        {
          "type": "ANTI_AFFINITY",
          "scope": "NODE",
          "target_tags": [
            "llap"
          ],
          "node_partitions": [
            "<llap node label>"
          ]
        }
      ]
    },

LLAP YARN Queue

Resources are assigned to LLAP via a dedicated YARN queue. The queue should be configured with:

- A User Limit Factor of 1, i.e. the Hive super user can consume the entire minimum capacity of the queue

- A default node label expression that specifies the LLAP node label

- 100% minimum and maximum capacity on the LLAP node label

- 0% minimum and maximum capacity on the default node label

- An explicit setting for maximum applications. This is necessary because a YARN bug means that the default maximum applications calculation only considers the queue’s capacity on the default node label (i.e. zero), meaning that no applications can run in the queue

- ACLs that allow the Hive super user to submit applications and administer the queue

In the following capacity-scheduler.xml snippet, the LLAP YARN queue and node label are both named llap:

yarn.scheduler.capacity.root.accessible-node-labels=llap

yarn.scheduler.capacity.root.accessible-node-labels.llap.capacity=100

yarn.scheduler.capacity.root.accessible-node-labels.llap.maximum-capacity=100

yarn.scheduler.capacity.root.llap.accessible-node-labels=llap

yarn.scheduler.capacity.root.llap.accessible-node-labels.llap.capacity=100

yarn.scheduler.capacity.root.llap.accessible-node-labels.llap.maximum-capacity=100

yarn.scheduler.capacity.root.llap.acl_submit_applications=hive

yarn.scheduler.capacity.root.llap.capacity=0

yarn.scheduler.capacity.root.llap.default-node-label-expression=llap

yarn.scheduler.capacity.root.llap.maximum-applications=20

yarn.scheduler.capacity.root.llap.maximum-capacity=0

yarn.scheduler.capacity.root.llap.minimum-user-limit-percent=100

yarn.scheduler.capacity.root.llap.ordering-policy=fifo

yarn.scheduler.capacity.root.llap.priority=100

yarn.scheduler.capacity.root.llap.state=RUNNING

yarn.scheduler.capacity.root.llap.user-limit-factor=1
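Because the queue and the node label share the name llap in the snippet above, it can be hard to see which part of each property key is which. The following Python sketch regenerates the same sixteen properties from separate queue and label names. The function name and its parameters are hypothetical, and it assumes that the LLAP queue is the only queue using the label and that the cluster has no other node labels (otherwise the root-level accessible-node-labels value would need to list them all).

```python
def llap_queue_properties(queue="llap", label="llap", user="hive", max_applications=20):
    """Build capacity-scheduler properties for a dedicated LLAP queue.

    Hypothetical helper: it only mirrors the property list shown in this post.
    """
    root = "yarn.scheduler.capacity.root"
    q = f"{root}.{queue}"
    return {
        # The root queue must be able to access the label and owns all of its capacity.
        f"{root}.accessible-node-labels": label,
        f"{root}.accessible-node-labels.{label}.capacity": "100",
        f"{root}.accessible-node-labels.{label}.maximum-capacity": "100",
        # The LLAP queue gets 100% of the label ...
        f"{q}.accessible-node-labels": label,
        f"{q}.accessible-node-labels.{label}.capacity": "100",
        f"{q}.accessible-node-labels.{label}.maximum-capacity": "100",
        # ... and 0% of the default (unlabelled) partition.
        f"{q}.capacity": "0",
        f"{q}.maximum-capacity": "0",
        f"{q}.default-node-label-expression": label,
        # Set explicitly, since the default is derived from the zero capacity above.
        f"{q}.maximum-applications": str(max_applications),
        f"{q}.acl_submit_applications": user,
        f"{q}.minimum-user-limit-percent": "100",
        f"{q}.ordering-policy": "fifo",
        f"{q}.priority": "100",
        f"{q}.state": "RUNNING",
        f"{q}.user-limit-factor": "1",
    }


# Example: a queue named "interactive" bound to a label named "llapnodes".
for key, value in sorted(llap_queue_properties(queue="interactive", label="llapnodes").items()):
    print(f"{key}={value}")
```

With the default arguments, the output matches the snippet above line for line (in sorted order).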

Ranger Policies

User impersonation (doAs=true) is not supported with LLAP. In other words, queries run as the hive super user instead of the user that submitted them. Consequently, the hive user needs HDFS access to the Hive warehouse, along with any custom table locations. If HDFS Transparent Data Encryption (TDE) is in use, the hive user also needs permission to decrypt the corresponding encrypted encryption keys (EEKs).

If you intend to use the Hive Streaming API (which bypasses Hiveserver2 Interactive for performance reasons) the user running the streaming process needs permission to write to the destination partition or table location.

Suggested Ranger policies for HDFS:

- A policy that gives the hive super user read, write, and execute access to:

  - /warehouse/tablespace/managed

  - /warehouse/tablespace/external

  - The hive.exec.scratchdir directory

  - The HDFS hive.aux.jars.path directory (if used)

  - The location of any external tables outside the Hive warehouse

- Policies that give each cluster tenant read, write, and execute access to their databases in the Hive warehouse, e.g.

  - /warehouse/tablespace/managed/hive/<db name>.db

  - /warehouse/tablespace/external/hive/<db name>.db

Keep in mind that SparkSQL bypasses Hiveserver2, and connects directly to the Hive Metastore. If you are using Spark without the Hive Warehouse Connector to interact with Hive external tables, additional permissions may be required. This is because SparkSQL queries continue to run as the user that submitted them.

LLAP Daemon Sizing

LLAP has a number of sizing related parameters. This section describes choosing suitable values for getting started.

| Parameter | Value | Description |
| --- | --- | --- |
| tez.am.resource.memory.mb | 4096 | The amount of memory (in MB) used by the query coordinator Tez Application Masters |
| hive.server2.tez.sessions.per.default.queue | Number of concurrent queries that LLAP will support | The number of query coordinators (Tez Application Masters) |
| hive.tez.container.size | Values between 4096 and 8192 are typical | Tez container size (in MB) |
| hive.llap.daemon.num.executors | See discussion below | Number of executors per LLAP daemon |
| hive.llap.io.threadpool.size | Same value as hive.llap.daemon.num.executors | I/O thread pool size |
| hive.llap.daemon.yarn.container.mb | See discussion below | Total memory (in MB) used by each LLAP daemon |
| hive.llap.io.memory.size | hive.llap.daemon.yarn.container.mb - llap_heap_size - llap_headroom_space | Off-heap cache size (in MB) per LLAP daemon |
| llap_headroom_space | Values of 6192 to 10240 are typical | Amount of off-heap memory (in MB) used for JVM overhead (metaspace, thread stacks, GC data structures etc.) |
| llap_heap_size | hive.llap.daemon.num.executors * hive.tez.container.size | Amount of heap memory (in MB) available for executors |
| num_llap_nodes_for_llap_daemons | The number of nodes assigned to the LLAP node label | Number of LLAP daemons |
| hive.auto.convert.join.noconditionaltask.size | 50% of tez.am.resource.memory.mb | Memory (in MB) used for Map joins |

Whilst the vast majority of memory resources should be allocated to the LLAP daemons (hive.llap.daemon.yarn.container.mb), some are also needed by the Tez Application Masters (the query coordinators and LLAP YARN service Application Master).

To calculate the Tez Application Master memory that must be reserved on each LLAP node:

ceil((hive.server2.tez.sessions.per.default.queue + 1) / (num_llap_nodes_for_llap_daemons - node_failure_tolerance)) * tez.am.resource.memory.mb

In this case, node_failure_tolerance is the number of nodes you want to be able to lose without affecting the overall availability of LLAP. The additional 1 accounts for the LLAP YARN service Application Master.

For example, if LLAP supports four concurrent queries, the LLAP node label is mapped to two nodes, and you want LLAP to survive the loss of one of them (i.e. n+1 redundancy):

ceil((4 + 1) / (2 - 1)) * 4096 = 20,480 MB

yarn.nodemanager.resource.memory-mb (per-node memory used for YARN containers) minus 20,480 MB is the maximum size of an LLAP daemon.

For example, if yarn.nodemanager.resource.memory-mb is 288 GB (294,912 MB), the LLAP daemon can be up to 268 GB (294,912 - 20,480 = 274,432 MB). Note, however, that yarn.scheduler.maximum-allocation-mb must be configured to allow containers of this size.
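The arithmetic above can be sketched in Python as follows. This is a minimal illustration rather than part of any Hive or YARN tooling: the function names are hypothetical, and the inputs (four sessions, two LLAP nodes, tolerance of one node failure, 288 GB of NodeManager memory) are the ones used in the worked example.

```python
import math


def tez_am_memory_per_node_mb(sessions, llap_nodes, node_failure_tolerance, am_memory_mb=4096):
    """Memory (in MB) to reserve on each LLAP node for Application Masters."""
    surviving_nodes = llap_nodes - node_failure_tolerance
    if surviving_nodes < 1:
        raise ValueError("at least one LLAP node must survive")
    # "+ 1" adds the LLAP YARN service Application Master to the query coordinators.
    ams_per_node = math.ceil((sessions + 1) / surviving_nodes)
    return ams_per_node * am_memory_mb


def max_llap_daemon_mb(nodemanager_memory_mb, am_memory_per_node_mb):
    """Largest LLAP daemon container (in MB) that still leaves room for the AMs."""
    return nodemanager_memory_mb - am_memory_per_node_mb


am_mb = tez_am_memory_per_node_mb(sessions=4, llap_nodes=2, node_failure_tolerance=1)
print(am_mb)                                   # 20480
print(max_llap_daemon_mb(288 * 1024, am_mb))   # 274432
```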

Generally speaking, the number of executors (hive.llap.daemon.num.executors) should be equal to yarn.nodemanager.resource.cpu-vcores (per-node vCores for YARN containers). However, on machines with high core counts this may not be practical. For example, if a node has 56 vcores and hive.tez.container.size is 4 GB, the executors alone would require 224 GB of memory, potentially leaving very little memory for the cache (hive.llap.io.memory.size). Under these circumstances, you can adjust the number of executors down, essentially achieving a larger cache at the expense of reduced task parallelism.
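To make the trade-off concrete, the sketch below applies the llap_heap_size and hive.llap.io.memory.size formulas from the table to a 274,432 MB daemon with 4 GB containers. The function name is hypothetical, the headroom of 10,240 MB is simply the upper end of the typical range quoted earlier, and the reduced executor count of 32 is an arbitrary illustrative choice, not a recommendation.

```python
def llap_memory_split_mb(daemon_mb, num_executors, container_mb, headroom_mb):
    """Return (heap_mb, cache_mb) for one LLAP daemon.

    heap  = num_executors * container size        (llap_heap_size)
    cache = daemon size - heap - headroom         (hive.llap.io.memory.size)
    """
    heap_mb = num_executors * container_mb
    cache_mb = daemon_mb - heap_mb - headroom_mb
    if cache_mb < 0:
        raise ValueError("executors and headroom do not fit in the daemon container")
    return heap_mb, cache_mb


for executors in (56, 32):
    heap, cache = llap_memory_split_mb(
        daemon_mb=274432, num_executors=executors, container_mb=4096, headroom_mb=10240
    )
    print(f"{executors} executors: heap={heap} MB, cache={cache} MB")
# 56 executors: heap=229376 MB, cache=34816 MB
# 32 executors: heap=131072 MB, cache=133120 MB
```

Under these assumptions, dropping from 56 to 32 executors roughly quadruples the cache, at the cost of running fewer query fragments in parallel on each node.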

LLAP Application Health Threshold

In Ambari-managed clusters, the LLAP YARN service is configured with a yarn.service.container-health-threshold.percent of 80% and a yarn.service.container-health-threshold.window-secs of 300 seconds, i.e. if fewer than 80% of LLAP containers (daemons) are in a READY state for five minutes, the service is considered unhealthy and is automatically stopped. In other words, with one daemon per host, you will need a minimum of five hosts in your node label for high availability, because losing one of five daemons still leaves 80% of them READY. For node labels consisting of fewer than five hosts, reduce yarn.service.container-health-threshold.percent. For example, with two hosts, a suitable threshold is 50%.
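The relationship between the number of daemons and a workable threshold can be sketched as below. Both function names are hypothetical, and the check assumes that the service is unhealthy only when the READY percentage is strictly below the threshold; how YARN rounds the percentage internally is not covered in this post, so treat the result as a starting point.

```python
def health_threshold_percent(num_daemons, tolerated_failures=1):
    """Highest whole-number threshold that still tolerates the given daemon losses."""
    if not 0 <= tolerated_failures < num_daemons:
        raise ValueError("tolerated_failures must be in [0, num_daemons)")
    return (100 * (num_daemons - tolerated_failures)) // num_daemons


def is_healthy(ready, total, threshold_percent):
    """True unless fewer than threshold_percent of the daemons are READY.

    This is an instantaneous check; the service is only stopped when it stays
    unhealthy for the whole health window.
    """
    return 100 * ready >= threshold_percent * total


print(health_threshold_percent(5))   # 80
print(health_threshold_percent(2))   # 50
print(is_healthy(1, 2, 80))          # False: one of two daemons is below 80%
print(is_healthy(1, 2, 50))          # True
```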

Unfortunately, Ambari does not currently allow for the customisation of yarn.service.container-health-threshold.percent via its UI. However, it is possible to edit /usr/hdp/current/hive-server2-hive/scripts/llap/yarn/templates.py on the Hiveserver2 Interactive host in order to override the default value.

    {
      "name": "%(name)s",
      "version": "1.0.0",
      "queue": "%(queue.string)s",
      "configuration": {
        "properties": {
          "yarn.service.rolling-log.include-pattern": ".*\\\\.done",
          "yarn.service.container-health-threshold.percent": "50",
          "yarn.service.container-health-threshold.window-secs": "%(health_time_window)d",
          "yarn.service.container-health-threshold.init-delay-secs": "%(health_init_delay)d"%(service_appconfig_global_append)s
        }
      },

Anti-Affinity and Node Labels

The LLAP YARN service is configured with an anti-affinity placement policy, which specifies that there should be no more than one LLAP daemon per node. Unfortunately, there is a YARN bug (YARN-10034), where allocation tags are not removed when a node is decommissioned.

For example, you might temporarily remove some LLAP nodes from the cluster in order to perform maintenance on them. Whilst doing so, you are careful to make sure that the number of daemons does not fall below the health threshold described previously. However, because the stale allocation tags remain, no new LLAP containers can run on those nodes once they are back in service.

The solution is to either apply the patch, or restart the Resource Manager, causing the allocation tags to be removed.

The LLAP Monitor (described later in this post) provides a /status endpoint that details the number of active, launching, and desired containers. This information can be used to alert whenever active + launching containers are below desired for an extended period of time.
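The alerting rule can be sketched as a small state machine. The class below is hypothetical and deliberately takes the three counts as plain integers: fetching and parsing the /status response is left out because the response format is not described in this post. The ten-minute grace period is likewise an arbitrary assumption.

```python
class ContainerShortfallAlert:
    """Fire once active + launching has been below desired for grace_secs."""

    def __init__(self, grace_secs=600):
        self.grace_secs = grace_secs
        self._short_since = None  # timestamp of the first sample in the current shortfall

    def observe(self, now, active, launching, desired):
        """Record one sample taken at time `now` (seconds); return True to alert."""
        if active + launching >= desired:
            self._short_since = None  # recovered, so reset the timer
            return False
        if self._short_since is None:
            self._short_since = now
        return now - self._short_since >= self.grace_secs


alert = ContainerShortfallAlert(grace_secs=600)
print(alert.observe(0, active=2, launching=0, desired=2))     # False: at full strength
print(alert.observe(60, active=1, launching=0, desired=2))    # False: shortfall just started
print(alert.observe(660, active=1, launching=0, desired=2))   # True: short for 600 seconds
```

Counting launching containers as healthy avoids alerting while YARN is still replacing a lost daemon; a persistent shortfall then points at problems such as stale allocation tags.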

Hiveserver2 Interactive High Availability

Hiveserver2 Interactive (HSI) supports high availability (HA) in the form of an Active/Passive standby configuration. Only one HSI can be in Active mode, whilst one or more additional HSI instances are in passive standby mode and ready to take over if the Active HSI fails.

To connect to the active leader HSI instance, clients use dynamic service discovery. For example:

jdbc:hive2://<zookeeper_quorum>;serviceDiscoveryMode=zooKeeperHA;zooKeeperNamespace=hiveserver2-interactive

It is important to note that the zooKeeperHA service discovery mode is distinct from the zooKeeper discovery mode used with traditional Hiveserver2.

With zooKeeperHA, the Hive connection will iterate through the list of HSI instances registered in ZooKeeper and identify the elected leader. The node information (hostname:port) for the leader is returned to the client, allowing it to connect to the Active HSI instance.

The Active HSI instance can lose its leadership at any time: for example, a long GC pause that causes a session/connection timeout, or a network split, results in leadership being revoked.

Before implementing HSI HA you should confirm that all JDBC and ODBC drivers used within your organization include support for zooKeeperHA service discovery.

We found that whilst the Hive JDBC driver does have support for zooKeeperHA, our chosen ODBC driver did not.

Unfortunately, running in a mixed-mode with some clients using service discovery and others making a direct connection to HSI is not a good option. As noted previously, leadership changes can occur in response to long GC pauses and so on, and any clients using a direct connection will encounter errors when attempting to interact with a passive HSI instance.

Fetch Task Conversion

Hive includes a performance optimisation where it converts certain kinds of simple queries into fetch tasks. Fetch tasks are direct HDFS accesses and are intended to improve performance by avoiding the overhead of generating a Tez Map task.

During lab testing, we found it was necessary to disable this feature due to compatibility problems with ACID tables and compaction.

Our test involved a simple NiFi flow using the PutHive3Streaming processor to write data to a managed table. During the test, we observed that simple queries against the table being written to failed with the following error whenever a compaction was running:

    org.apache.hive.service.cli.HiveSQLException: java.io.IOException: java.io.FileNotFoundException: File does not exist: /warehouse/tablespace/managed/hive/acid_test.db/test/delta_0000783_0000783/bucket_00000
        at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:86)
        at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:76)
        at org.apache.hadoop.hdfs.server.namenode.FSDirStatAndListingOp.getBlockLocations(FSDirStatAndListingOp.java:158)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlockLocations(FSNamesystem.java:1931)
        at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getBlockLocations(NameNodeRpcServer.java:738)
        at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getBlockLocations(ClientNamenodeProtocolServerSideTranslatorPB.java:426)
        at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:524)
        at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1025)
        at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:876)
        at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:822)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:422)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)
        at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2682)

Compactions in Hive occur in the background and are not meant to prevent concurrent reads and writes of the data. After a compaction, the system waits until all readers of the old files have finished and then removes the old files.

However, since fetch tasks involve direct HDFS access, they bypass the locking that allows the concurrent reads and writes of the data to occur. This was resolved by effectively disabling fetch conversion, i.e. setting hive.fetch.task.conversion to none. This issue is not actually specific to LLAP and also applies to traditional Hive on Tez.

Troubleshooting

Troubleshooting LLAP is a big subject. This section intends to offer some brief pointers on getting started.

Resource Manager UI

You can use the Resource Manager UI to see what applications are running in the LLAP queue.

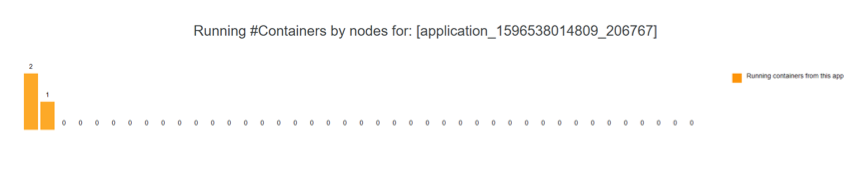

The LLAP application should have n+1 containers, where n is the number of LLAP daemons and the additional container is its Application Master. By default, the application is named llap0.

In addition, you can expect to see n Tez Application Masters where n is the value of hive.server2.tez.sessions.per.default.queue.

The following example shows an LLAP application with two daemons, and thus three containers.

LLAP Status

You can check the status of the LLAP daemons with the following command:

hive --service llapstatus

YARN Application Logs

As described previously, we initially encountered problems with the LLAP daemons being preempted. This could sometimes end in a complete LLAP outage, either because every daemon was preempted, or because YARN stopped the LLAP application once the number of daemons fell below the health threshold. When this happened, YARN logs were essential for identifying the root cause.

For example, clients and Hiveserver2 Interactive would report error stacks, noting that No LLAP Daemons are running:

2020-06-15T14:14:33,549 ERROR [HiveServer2-Background-Pool: Thread-15585]: SessionState (SessionState.java:printError(1250)) - Status: Failed

2020-06-15T14:14:33,549 ERROR [HiveServer2-Background-Pool: Thread-15585]: SessionState (SessionState.java:printError(1250)) - Dag received [DAG_TERMINATE, SERVICE_PLUGIN_ERROR] in RUNNING state.

2020-06-15T14:14:33,549 ERROR [HiveServer2-Background-Pool: Thread-15585]: SessionState (SessionState.java:printError(1250)) - Error reported by TaskScheduler [[2:LLAP]][SERVICE_UNAVAILABLE]

No LLAP Daemons are running

To understand why there were no daemons running, we would consult the LLAP Application Master YARN log:

yarn logs -applicationId <application-id> -am ALL

In this example, we can see that the daemon was killed by YARN because the container grew beyond the configured physical memory limit:

2020-05-28 08:46:20,302 [Component dispatcher] INFO component.Component - [COMPONENT llap] Submitting scheduling request: SchedulingRequestPBImpl{priority=0, allocationReqId=0, executionType={Execution Type: GUARANTEED, Enforce Execution Type: true}, allocationTags=[llap], resourceSizing=ResourceSizingPBImpl{numAllocations=1, resources=<memory:86016, vCores:1>}, placementConstraint=notin,node,llap}

2020-05-28 08:46:20,303 [Component dispatcher] ERROR instance.ComponentInstance - [COMPINSTANCE llap-4 : container_e497_1589791514056_134237_01_000012]: container_e497_1589791514056_134237_01_000012 completed. Reinsert back to pending list and requested a new container.

exitStatus=-104, diagnostics=[2020-05-28 08:46:07.666]Container [pid=59325,containerID=container_e497_1589791514056_134237_01_000012] is running 73252864B beyond the 'PHYSICAL' memory limit. Current usage: 84.1 GB of 84 GB physical memory used; 85.8 GB of 176.4 GB virtual memory used. Killing container.

Dump of the process-tree for container_e497_1589791514056_134237_01_000012 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 59334 59332 59325 59325 (java) 845289 434316 91843993600 22037209 /etc/alternatives/java_sdk/bin/java -Dproc_llapdaemon -Xms32768m -Xmx65536m -Dhttp.maxConnections=17 -XX:CompressedClassSpaceSize=2G -XX:+AlwaysPreTouch -XX:+UseG1GC -XX:TLABSize=8m -XX:+ResizeTLAB -XX:+UseNUMA -XX:+AggressiveOpts -XX:MetaspaceSize=1024m -XX:InitiatingHeapOccupancyPercent=80 -XX:MaxGCPauseMillis=200 -XX:MetaspaceSize=1024m -server -Djava.net.preferIPv4Stack=true -XX:+UseNUMA -XX:+PrintGCDetails -verbose:gc -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=4 -XX:GCLogFileSize=100M -XX:+PrintGCDateStamps -Xloggc:/hadoop/ssd2/hadoop/yarn/log/application_1589791514056_134237/container_e497_1589791514056_134237_01_000012/gc_2020-05-23-11.log -Djava.io.tmpdir=/hadoop/ssd2/hadoop/yarn/local/usercache/hive/appcache/application_1589791514056_134237/container_e497_1589791514056_134237_01_000012/tmp/ -Dlog4j.configurationFile=llap-daemon-log4j2.properties -Dllap.daemon.log.dir=/hadoop/ssd2/hadoop/yarn/log/application_1589791514056_134237/container_e497_1589791514056_134237_01_000012 -Dllap.daemon.log.file=llap-daemon-hive-worker81.log -Dllap.daemon.root.logger=query-routing -Dllap.daemon.log.level=INFO -classpath /hadoop/ssd2/hadoop/yarn/local/usercache/hive/appcache/application_1589791514056_134237/container_e497_1589791514056_134237_01_000012/lib/conf/:/hadoop/ssd2/hadoop/yarn/local/usercache/hive/appcache/application_1589791514056_134237/container_e497_1589791514056_134237_01_000012/lib//lib/*:/hadoop/ssd2/hadoop/yarn/local/usercache/hive/appcache/application_1589791514056_134237/container_e497_1589791514056_134237_01_000012/lib//lib/tez/*:/hadoop/ssd2/hadoop/yarn/local/usercache/hive/appcache/application_1589791514056_134237/container_e497_1589791514056_134237_01_000012/lib//lib/udfs/*:.: org.apache.hadoop.hive.llap.daemon.impl.LlapDaemon

|- 59325 59323 59325 59325 (bash) 0 0 118067200 368 /bin/bash -c /hadoop/ssd2/hadoop/yarn/local/usercache/hive/appcache/application_1589791514056_134237/container_e497_1589791514056_134237_01_000012/lib/bin//llapDaemon.sh start &> /hadoop/ssd2/hadoop/yarn/local/usercache/hive/appcache/application_1589791514056_134237/container_e497_1589791514056_134237_01_000012/tmp//shell.out 1>/hadoop/ssd2/hadoop/yarn/log/application_1589791514056_134237/container_e497_1589791514056_134237_01_000012/stdout.txt 2>/hadoop/ssd2/hadoop/yarn/log/application_1589791514056_134237/container_e497_1589791514056_134237_01_000012/stderr.txt

|- 59332 59325 59325 59325 (bash) 0 0 118071296 403 bash /hadoop/ssd2/hadoop/yarn/local/usercache/hive/appcache/application_1589791514056_134237/container_e497_1589791514056_134237_01_000012/lib/bin//llapDaemon.sh start

[2020-05-28 08:46:07.676]Container killed on request. Exit code is 143

[2020-05-28 08:46:20.212]Container exited with a non-zero exit code 143.
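When the Application Master log is long, it can help to pull out just the memory-limit kills. The following Python sketch does this with a regular expression written against the diagnostics message shown above; the function name and the returned dictionary keys are hypothetical, and the pattern is an assumption based on this one sample, so other Hadoop versions may word the message differently.

```python
import re

# Matches the "running beyond the memory limit" diagnostics emitted when YARN
# kills a container, as seen in the sample log above.
MEMORY_KILL = re.compile(
    r"containerID=(?P<container>container_\w+)\] is running (?P<over>\d+)B beyond "
    r"the '(?P<kind>\w+)' memory limit\. Current usage: (?P<used>[\d.]+) GB of "
    r"(?P<limit>[\d.]+) GB physical memory used"
)


def find_memory_kills(log_text):
    """Return one dict per container killed for exceeding its memory limit."""
    kills = []
    for match in MEMORY_KILL.finditer(log_text):
        kills.append({
            "container": match.group("container"),
            "limit_type": match.group("kind"),
            "over_bytes": int(match.group("over")),
            "used_gb": float(match.group("used")),
            "limit_gb": float(match.group("limit")),
        })
    return kills


sample = (
    "Container [pid=59325,containerID=container_e497_1589791514056_134237_01_000012] "
    "is running 73252864B beyond the 'PHYSICAL' memory limit. Current usage: 84.1 GB "
    "of 84 GB physical memory used; 85.8 GB of 176.4 GB virtual memory used. Killing container."
)
for kill in find_memory_kills(sample):
    print(kill["container"], kill["limit_type"], kill["over_bytes"])
```

The output of the yarn logs command could be read into a string and passed to find_memory_kills in the same way.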

In cases where the LLAP application id is no longer available in the Resource Manager UI, it can be obtained from hiveserver2Interactive.log:

grep "query will use" /var/log/hive/hiveserver2Interactive.log | tail -1

2020-09-02T14:32:05,388 INFO [ATS Logger 0]: hooks.ATSHook (ATSHook.java:determineLlapId(473)) - The query will use LLAP instance application_1596538014809_818483 (@llap2)
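If the application id needs to be passed on to another tool, such as the yarn logs command shown earlier, the same lookup can be scripted. The sketch below is a hypothetical Python helper that assumes the log line format shown above and returns the id from the most recent matching line.

```python
import re

# Pattern based on the ATSHook log line shown above.
LLAP_INSTANCE = re.compile(r"The query will use LLAP instance (application_\d+_\d+)")


def latest_llap_application_id(lines):
    """Return the application id from the last matching line, or None."""
    app_id = None
    for line in lines:
        match = LLAP_INSTANCE.search(line)
        if match:
            app_id = match.group(1)  # keep overwriting so the last match wins
    return app_id


sample_lines = [
    "2020-09-02T14:32:05,388 INFO [ATS Logger 0]: hooks.ATSHook "
    "(ATSHook.java:determineLlapId(473)) - The query will use LLAP instance "
    "application_1596538014809_818483 (@llap2)",
]
print(latest_llap_application_id(sample_lines))  # application_1596538014809_818483

# Against the real log file, the call would look like this:
# with open("/var/log/hive/hiveserver2Interactive.log") as log_file:
#     print(latest_llap_application_id(log_file))
```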

It is worth noting that the LLAP daemons write a log file for each query they run. These log files are managed by YARN log aggregation in the usual manner, which means that over time they can grow extremely large. This can make diagnosing problems with the daemons a challenge; dumping the logs to disk can require hundreds of gigabytes of disk space, and take many hours to complete. On the other hand, the Application Master log is always a manageable size.

Hiveserver2 Interactive Web UI

The Hiveserver2 Interactive Web UI provides configuration, logging, metrics and active session information. In Ambari-managed (HDP) clusters it is accessible on port 10502 by default.

It is configured via the following parameters in hive-interactive-site.xml:

hive.users.in.admin.role=<comma-separated list of users>

hive.server2.webui.spnego.keytab=/etc/security/keytabs/spnego.service.keytab

hive.server2.webui.spnego.principal=HTTP/_HOST@EXAMPLE.COM

hive.server2.webui.use.spnego=true

The following table details the available UI pages and useful endpoints for metric scraping etc.

| URL | Description |
| --- | --- |
| http://<host>:10502 | Active sessions, open queries, and last 25 closed queries overview |
| http://<host>:10502/jmx | Hiveserver2 system metrics |
| http://<host>:10502/conf | Current Hiveserver2 configuration |
| http://<host>:10502/peers | Overview of Hiveserver2 Interactive instances in the cluster |
| http://<host>:10502/stacks | Show a stack trace of all JVM threads |
| http://<host>:10502/llap.html | Status of the LLAP daemons |
| http://<host>:10502/conflog | Current log4j log level |

LLAP Monitor

Each LLAP daemon has a Monitor that listens on port 15002 by default. You can use the LLAP status command or the LLAP Daemons tab on the Hiveserver2 Interactive Web UI to quickly determine where the LLAP daemons are running.

The following table details the available UI pages and useful endpoints for metric scraping etc.

| URL | Description |
| --- | --- |
| http://<host>:15002 | Heap, cache, executor, and system metrics overview |
| http://<host>:15002/conf | Current daemon configuration |
| http://<host>:15002/peers | Overview of LLAP nodes in the cluster (obtained from ZooKeeper) |
| http://<host>:15002/iomem | Cache contents and usage details |
| http://<host>:15002/jmx | Daemon system metrics |
| http://<host>:15002/stacks | Show a stack trace of all JVM threads |
| http://<host>:15002/conflog | Current log4j log level |
| http://<host>:15002/status | Status of the LLAP daemon |

LLAP IO Counters

Set hive.tez.exec.print.summary to true in order to report data and metadata cache hits and misses for each query you run.

Future Work

There are still a number of interesting LLAP features that we have yet to explore; these will be the subject of future work.

SSD Cache

LLAP includes an SSD cache, which allows RAM and SSD to be combined into a single large pool. With the SSD cache, we should be able to cache even more data. This will cause some of the sizing calculations detailed in this blog post to change, as a portion of memory will be needed to store SSD cache metadata.

Workload Management

LLAP workload management can help to minimize the impact of “noisy neighbours” in our multi-tenant cluster. For example, we can define triggers that kill long-running or resource-hungry queries, or move them to a different resource pool.

Austin Hackett, Big Data Platform Engineer
